Primer

Log entry = (Term, Index, Command)

in RDBMS, Log=WAL/redo log, FSM=records

Components

- FSM

- LogStore

- LastIndex

- FirstIndex

- StableStore

- CurrentTerm

- LastVoteTerm

- LastVoteCand

- SnapshotStore

- PeerStore

RPC Protocol

msgpack serializer

- AppendEntries

Leader发起

If got reponse with Success=false,then step down(退位)- Term

Each term begins with an election - Leader

partition后,old leader在复制日志时,通过它发现new leader,并step down - PrevLogIndex, PrevLogTerm

它们确保在相同term/index上的log内容完全一致, 而且之前的所有log内容一致:safety - LeaderCommitIndex

只有committed log才能被FSM Apply - []Log

- Term, Index

- Type

- LogCommand

复制日志 - LogAddPeer

- LogRemovePeer

- LogNoop

- LogBarrier

- LogCommand

- Term

- RequestVote

Candidate发起,if not Granted,step down to follower

leader/candidate也可能收到RequestVote

发起投票时,可能会收到AppendEntries,比较term来决定进入follower状态还是拒绝- Term

- LastLogIndex, LogLogTerm

选民如果发现candidate的log没有自己的新,则拒绝投票

阻止一个不包含所有committed entries的candidate成为leader

- InstallSnapshot

Leader发起

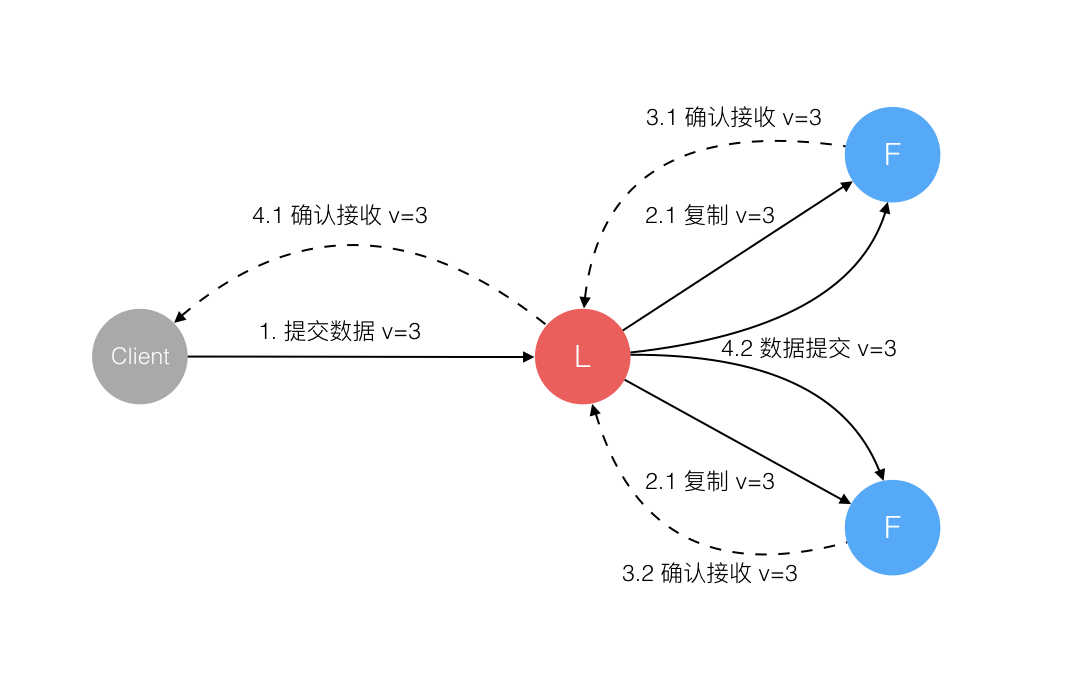

2PC

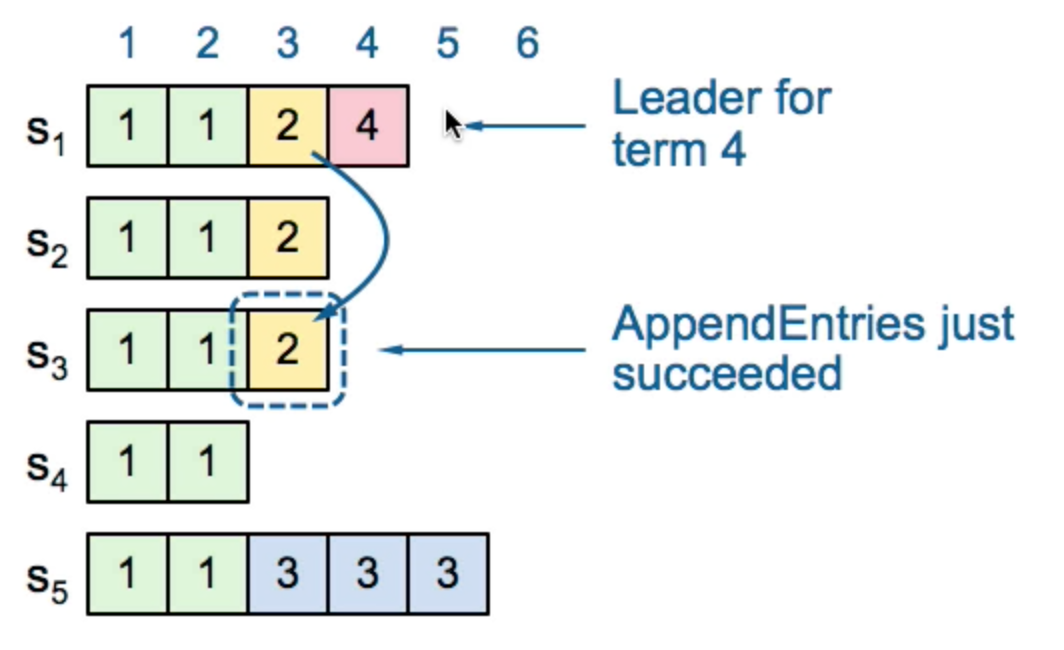

Leader’s log is ‘the truth’

2.1是第一次AppendEntries,3.1后Leader apply to local FSM并update commit index,就可以向client返回了

4.2是下一次AppendEntries,leader通知followers最新的commit index,followers才会apply to FSM

When the Commit Index is updated, the node will pass all commands between the new and old Commit Index to the state machine.

phase2的作用:

uncommitted log是可能回滚的

Commit

For a leader to decide an entry is committed

- must be stored on majority

- at least one new entry from current term must also be stored on majority

Election

leader要给peers发心跳,阻止新选举(如果一直没有Apply,多久发心跳?)

处理RequestVote RPC

Replication

leader保存每个followerReplication状态

|

|

Repair follower logs

- delete extraneous entries

- fill in missing entries

startup

|

|

runFSM

|

|

runLeader

|

|

appendEntries RPC

|

|

runCandidate

|

|

Configuration Change

采用2PC方法: C_old -> C_old+new -> C_new

http://zookeeper.apache.org/doc/trunk/zookeeperReconfig.html

zookeeper 3.5.0开始,也有了动态修改cluster的功能

Q & A

time

|

|

为什么每个node上的current term需要持久化?

It is best guess, persistent for recovery after crash.

恢复时,从leader拿不行吗?

为什么每个node上的votedFor要持久化?

为了保证election safety: allow at most one winner per term

term1,A vote for B,然后A crash,等A恢复了,如果voteFor不持久化,可能它对term1又vote for C了

成为leader后,立刻发heartbeat还是等heartbeat timeout?

如果一个follower的AppendEntries失败,leader怎么处理?

一直retry,Leader’s log is ‘the truth’.

Log是幂等的,因为有term/index,可以很容易排重

但在client方面,就没有保障了:

如果leader crash after executing command but before responding?

client如果盲目retry,有可能造成重复执行

解决办法:client在发送log时,在每个命令上加入id,确保幂等

leader上保存每个follower的index

Engineering

Config

HeartbeatTimeout = ElectionTimeout = 1s

LeaderLeaseTimeout = 500ms

CommitTimeout = 50ms

MaxAppendEntries = 64

SnapshotInterval = 2m

SnapshotThreshold = 8192

Group Commit

0 < MaxAppendEntries <= 1024

|

|

Lease

除了follower通过被动接受心跳来检测leader存活,leader本身也通过与majority follower

的response来判断自己是否已经被partition了,如果是,进入Follower状态

Pipeline

仅仅用于AppendEntries,通过channel实现多次发送RPC给follower而不等待response

但如果有错误响应,立刻取消pipeline模式

max outstanding AppendEntries RPC calls = 128

Limitations

Apply([]Log)

只能在leader上发起,follower没有自动redispatch

applyCh是no buffer的StableStore.GetUint64

如果没有找到key,返回的error必须是”not found”LeaderCh() might lose event

Paxos

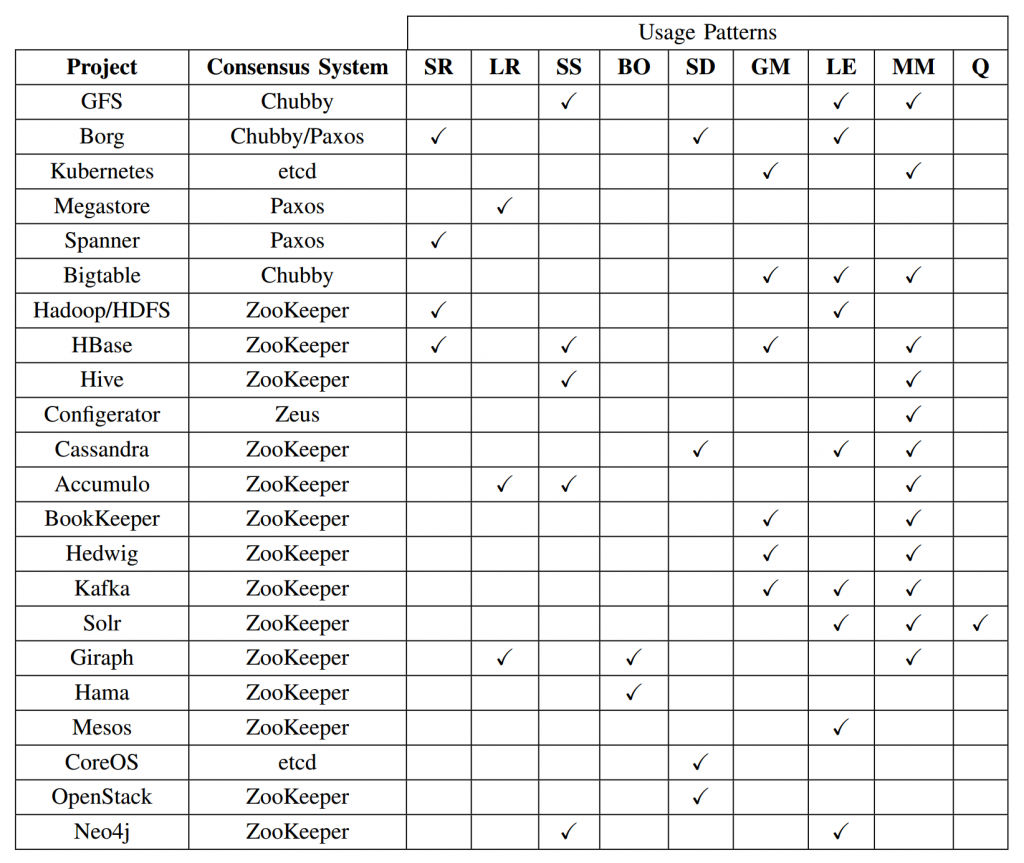

server replication (SR), log replication (LR), synchronisation service (SS), barrier orchestration (BO), service discovery (SD), leader election (LE), metadata management (MM), and Message Queues (Q).

CAP

证明:

在一个network partition的2个节点,现在有两个client分别向他们发送冲突的请求,如果要C,那么必然有一个节点要拒绝:牺牲A;如果要A,必然牺牲C

References

1985 FLP Impossibility Result

1988 Oki and Liskov’s Viewstamped Repication

1998 Lamport’s original Paxos paper “Part-Time Parliment”

2000 Brewer proposes CAP conjecture during Keynote at PODC

2002 Proof of CAP by Gilbert and Lynch(CAP 2年后才被正式证明)

2005 Lamport’s Technical Report on Generalized Consensus & Paxos

2013 Raft Consensus Paper first available online

http://www.cnblogs.com/foxmailed/p/3418143.html

http://raftuserstudy.s3-website-us-west-1.amazonaws.com/study/raft.pptx

http://www.read.seas.harvard.edu/~kohler/class/08w-dsi/chandra07paxos.pdf

https://www.cse.buffalo.edu//~demirbas/publications/cloudConsensus.pdf

http://www.cl.cam.ac.uk/techreports/UCAM-CL-TR-857.pdf